Browse Source

git-svn-id: http://newslabx.csie.ntu.edu.tw/svn/Ginger@2 5747cdd2-2146-426f-b2b0-0570f90b98ed

master

15 changed files with 438 additions and 362 deletions

Split View

Diff Options

-

+1 -1trunk/00Abstract.tex

-

+18 -3trunk/01Introduction.tex

-

+11 -6trunk/02Background.tex

-

+31 -17trunk/03Design.tex

-

+12 -9trunk/04Evaluation.tex

-

+58 -30trunk/Main.aux

-

+15 -3trunk/Main.bcf

-

BINtrunk/Main.dvi

-

+285 -271trunk/Main.log

-

BINtrunk/Main.pdf

-

BINtrunk/Main.synctex.gz

-

+7 -22trunk/Main.tex

-

BINtrunk/figures/ThermalAtHome.pdf

-

BINtrunk/figures/compareToJpeg.pdf

-

BINtrunk/figures/separate.png

+ 1

- 1

trunk/00Abstract.tex

View File

| @ -1,5 +1,5 @@ | |||

| \begin{abstract} | |||

| In a IoT environment, there are many devices will periodically transmit data. However, most of the data are useless, but sensor itself may not have a good standard to decide transmit or not. Some static rule maybe useful on specific scenario, and become useless when we change the usage of the sensor. In this paper, we want to present a method to reduce the file size of thermal sensor which can sense the temperature of a surface and output a two dimension gray scale image. In our evaluation result, we can reduce the file size to $50\%$ less than JPEG when there is $0.5\%$ of distortion, and up to $93\%$ less when there is $2\%$ of distortion. | |||

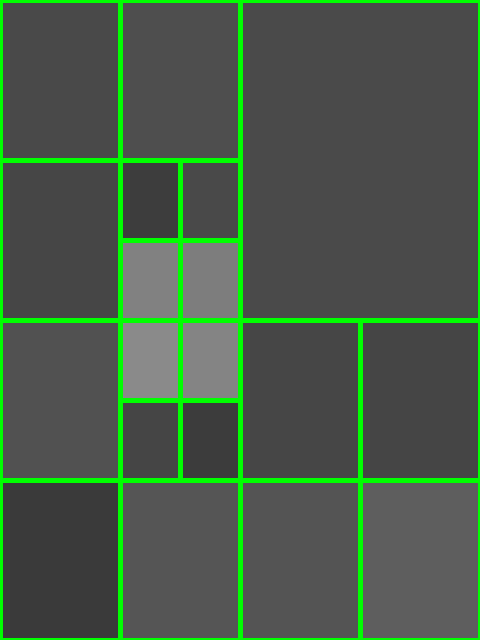

| In a IoT environment, many devices will periodically transmit data. However, most of the data are redundant, but sensor itself may not have a good standard to decide transmit or not. Some static rule maybe useful on specific scenario, and become ineffective when we change the usage of the sensor. Hence, we design an algorithm to solve the problem of data redundant. In the algorithm, we iteratively separate an image into some smaller regions. Each round, choose a region with highest variability, and separate it into four regions. Finally, each region has different size and uses its average value to represent itself. If a area is more various, the density of regions will be higher. In this paper, we present a method to reduce the file size of thermal sensor which can sense the temperature of a surface and outputs a two dimension gray scale image. In our evaluation result, we can reduce the file size to $50\%$ less than JPEG when there is $0.5\%$ of distortion, and up to $93\%$ less when there is $2\%$ of distortion. | |||

| \end{abstract} | |||

+ 18

- 3

trunk/01Introduction.tex

View File

| @ -1,14 +1,29 @@ | |||

| \section{Introduction} | |||

| \label{sec:introduction} | |||

| Walking exercises the nervous, cardiovascular, pulmonary, musculoskeletal and hematologic systems because it requires more oxygen to contract the muscles. Hence, {\it gait velocity}, or called {\it walking speed}~\cite{Middleton2015}, has become a valid and important metric for senior populations~\cite{Middleton2015,studenski2011,Studenski03}. | |||

| In a IoT environment, there are many devices will periodically transmit data. Some sensor is use for avoid accidents, so they will have very high sensing frequency. However, most of the data are useless. Like a temperature sensor on a gas stove, the temperature value is the same as the value from air conditioner and does not change very frequently, but it will have dramatically difference when we are cooking. We can simply make a threshold that when temperature is higher or lower than some degrees, the data will be transmitted, and drop the data that we don't interest. This is a very easy solution if we only have a few devices, but when we have hundreds or thousands devices, it is impossible to manually configure all devices, and the setting may need to change in the winter and summer, or different location. Hence, a framework to select useful data is important. | |||

| In 2011, Studenski et al~\cite{studenski2011} published a study that tracked gait velocity of over 34,000 seniors from 6 to 21 years in US. The study found that predicted survival rate based on age, sex, and gait velocity was as accurate as predicted based on age, sex, chronic conditions, smoking history, blood pressure, body mass index, and hospitalization. Consequently, it has motivated the industrial and academia communities to develop the methodology to track and assess the risk based on gait velocity. The following years have led to many papers that point to the importance of gait velocity as a predictor of degradation and exacerbation events associated with various chronic diseases including heart failure, COPD, kidney failure, stroke, etc~\cite{Studenski03, pulignano2016, Konthoraxjnl2015, kutner2015}. | |||

| On Raspberry Pi 3, while it is idling and turning off WiFi, it will consume 240mA and while uploading data at 24Mbit/s, it will consume 400mA. If we sent $640 \times 480$ pixels heat map images in png format (average 45KB) in 10Hz, it will consume about 264mA. | |||

| In the US, there are 13 million seniors who live alone at home~\cite{profile2015}. Gait velocity and stride length are particularly important in this case since they provide an assessment of fall risk, the ability to perform daily activities such as bathing and eating, and hence the potential for being independent. Assessment of gait velocity is recommended to instruct the subjects to walk back and forth in a 5, 8 or 10 meter walkway. Similar results were found in a study comparing a 3 meter walk test to the GAITRite electronic walkway in individuals with chronic stroke~\cite{Peters2013}. | |||

| The above approaches are conducted either at the clinical institutes or designated locations. They are recommended by the physicians but are required to be conducted at limited time and location. Consequently, it is difficult to observe the change in long term. It is desirable for the elderly, their family members, and physicians to monitor gait velocity for the elderly all the time at any location. However, the assessment should take into account several factors, including accuracy, privacy, portability, robustness, and applicability. | |||

| Shih and his colleagues~\cite{Shih17b} proposed a sensing system to be installed at home or nursing institute without revealing privacy and not using wearable devices. Given the proposed method, one may deploy several thermal sensors in his/her apartments as shown in Figure~\ref{fig:gaitVelocitySmartHome}. In this example, numbers of thermal sensors are deployed to increase the coverage of the sensing signals. In large spaces such as living room, there will be more than one sensor in one space; in small spaces such as corridor, there can be only one sensor. One fundamental question to ask is how many sensors should be deployed and how these sensors work together seamlessly to provide accurate gait velocity measurement. | |||

| \begin{figure}[ht] | |||

| \centering | |||

| \includegraphics[trim={1cm 3cm 2cm 2cm},clip,width=1\columnwidth]{figures/ThermalAtHome.pdf} | |||

| \caption{Gait Velocity Measurement at Smart Homes} | |||

| \label{fig:gaitVelocitySmartHome} | |||

| \end{figure} | |||

| In a IoT environment, many devices will periodically transmit data. Some sensor is use for avoid accidents, so they will have very high sensing frequency. However, most of the data are redundant. Like a temperature sensor on a gas stove, the temperature value is the same as the value from air conditioner and does not change very frequently, but it will have dramatically difference when we are cooking. We can simply make a threshold that when temperature is higher or lower than some degrees, the data will be transmitted, and drop the data that we don't interest. This is a very easy solution if we only have a few devices, but when we have hundreds or thousands devices, it is impossible to manually configure all devices, and the setting may need to change in the winter and summer, or different location. | |||

| In this paper, we study the data from Panasonic Grid-EYE, a $8 \times 8$ pixels infrared array sensor, and FLIR ONE PRO, a $480 \times 640$ pixels thermal camera. Both are setting on ceiling and taking a video of a person walking under the camera. | |||

| {\bf Contribution} The contribution of this work is to present a framework for user to choose either the bit-rate or the error rate of the video. By the method we proposed, the size of file can reduce more than $50\%$ compare to JPEG image when both have $0.5\% (0.18^\circ C)$ of root-mean-square error. | |||

| In Figure~\ref{fig:gaitVelocitySmartHome}, there are fifteen thermal sensor in a house. If they are Panasonic Grid-EYE, it will have 2 bytes per pixel, 64 pixels per frame, 10 frames per second, and total need 1.7GB storage space per day. If they are FLIR ONE PRO, it can only generate 5 frames per second but needs about 45KB per frame, and it will need 291.6GB everyday. | |||

| {\bf Contribution} The target of our work is to compress the thermal image retrieved from FLIR ONE PRO to targeted data size and keep the quality of data. Nearby pixels in a thermal image mostly have similar value, so we can easily separate an image into several regions and use its average value to represent it wont cause too much error. By the method we proposed, the size of file can reduce more than $50\%$ compare to JPEG image when both have $0.5\% (0.18^\circ C)$ of root-mean-square error. | |||

| The remaining of this paper is organized as follow. Section~\ref{sec:bk_related} presents related works and background for developing the methods. Section~\ref{sec:design} presents the system architecture, challenges, and the developed mechanisms. Section~\ref{sec:eval} presents the evaluation results of proposed mechanism and Section~\ref{sec:conclusion} summaries our works. | |||

+ 11

- 6

trunk/02Background.tex

View File

+ 31

- 17

trunk/03Design.tex

View File

+ 12

- 9

trunk/04Evaluation.tex

View File

+ 58

- 30

trunk/Main.aux

View File

| @ -1,42 +1,70 @@ | |||

| \relax | |||

| \abx@aux@refcontext{none/global//global/global} | |||

| \abx@aux@cite{Middleton2015} | |||

| \abx@aux@segm{0}{0}{Middleton2015} | |||

| \abx@aux@segm{0}{0}{Middleton2015} | |||

| \abx@aux@cite{studenski2011} | |||

| \abx@aux@segm{0}{0}{studenski2011} | |||

| \abx@aux@cite{Studenski03} | |||

| \abx@aux@segm{0}{0}{Studenski03} | |||

| \abx@aux@segm{0}{0}{studenski2011} | |||

| \abx@aux@segm{0}{0}{Studenski03} | |||

| \abx@aux@cite{pulignano2016} | |||

| \abx@aux@segm{0}{0}{pulignano2016} | |||

| \abx@aux@cite{Konthoraxjnl2015} | |||

| \abx@aux@segm{0}{0}{Konthoraxjnl2015} | |||

| \abx@aux@cite{kutner2015} | |||

| \abx@aux@segm{0}{0}{kutner2015} | |||

| \abx@aux@cite{profile2015} | |||

| \abx@aux@segm{0}{0}{profile2015} | |||

| \abx@aux@cite{Peters2013} | |||

| \abx@aux@segm{0}{0}{Peters2013} | |||

| \@writefile{toc}{\boolfalse {citerequest}\boolfalse {citetracker}\boolfalse {pagetracker}\boolfalse {backtracker}\relax } | |||

| \@writefile{lof}{\boolfalse {citerequest}\boolfalse {citetracker}\boolfalse {pagetracker}\boolfalse {backtracker}\relax } | |||

| \@writefile{lot}{\boolfalse {citerequest}\boolfalse {citetracker}\boolfalse {pagetracker}\boolfalse {backtracker}\relax } | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {section}{\numberline {I}Introduction}{1}} | |||

| \newlabel{sec:introduction}{{I}{1}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {section}{\numberline {II}Background and Related Works}{1}} | |||

| \newlabel{sec:bk_related}{{II}{1}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {subsection}{\numberline {II-A}Panasonic Grid-EYE Thermal Sensor}{1}} | |||

| \@writefile{lof}{\defcounter {refsection}{0}\relax }\@writefile{lof}{\contentsline {figure}{\numberline {1}{\ignorespaces Walking under a Grid-EYE sensor}}{1}} | |||

| \newlabel{fig:GridEye}{{1}{1}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {subsection}{\numberline {II-B}Simple Data Compressing}{1}} | |||

| \abx@aux@cite{Shih17b} | |||

| \abx@aux@segm{0}{0}{Shih17b} | |||

| \@writefile{lof}{\defcounter {refsection}{0}\relax }\@writefile{lof}{\contentsline {figure}{\numberline {1}{\ignorespaces Gait Velocity Measurement at Smart Homes}}{3}} | |||

| \newlabel{fig:gaitVelocitySmartHome}{{1}{3}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {section}{\numberline {II}Background and Related Works}{4}} | |||

| \newlabel{sec:bk_related}{{II}{4}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {subsection}{\numberline {\unhbox \voidb@x \hbox {II-A}}Panasonic Grid-EYE Thermal Sensor}{4}} | |||

| \@writefile{lof}{\defcounter {refsection}{0}\relax }\@writefile{lof}{\contentsline {figure}{\numberline {2}{\ignorespaces Walking under a Grid-EYE sensor}}{4}} | |||

| \newlabel{fig:GridEye}{{2}{4}} | |||

| \abx@aux@segm{0}{0}{Shih17b} | |||

| \abx@aux@segm{0}{0}{Shih17b} | |||

| \abx@aux@cite{guo2011simple} | |||

| \abx@aux@segm{0}{0}{guo2011simple} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {subsubsection}{\numberline {II-B.1}Huffman Coding}{2}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {subsubsection}{\numberline {II-B.2}Z-score Threshold}{2}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {subsubsection}{\numberline {II-B.3}Gaussian Function Fitting}{2}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {subsection}{\numberline {II-C}FLIR ONE PRO}{2}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {section}{\numberline {III}Data Size Decision Framework}{2}} | |||

| \newlabel{sec:design}{{III}{2}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {subsection}{\numberline {III-A}Heuristic Data Resolution Determination}{2}} | |||

| \@writefile{lof}{\defcounter {refsection}{0}\relax }\@writefile{lof}{\contentsline {figure}{\numberline {2}{\ignorespaces Region separate by CFG}}{2}} | |||

| \newlabel{fig:ContextFreeString}{{2}{2}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {subsection}{\numberline {III-B}Data Structure and Region Selection Algorithm}{2}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {section}{\numberline {IV}Performance Evaluation}{3}} | |||

| \newlabel{sec:eval}{{IV}{3}} | |||

| \@writefile{lof}{\defcounter {refsection}{0}\relax }\@writefile{lof}{\contentsline {figure}{\numberline {3}{\ignorespaces PNG image, size = 46KB}}{3}} | |||

| \newlabel{fig:pngImage}{{3}{3}} | |||

| \newlabel{sec:conclusion}{{V}{3}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {section}{\numberline {V}Conclusion}{3}} | |||

| \@writefile{lof}{\defcounter {refsection}{0}\relax }\@writefile{lof}{\contentsline {figure}{\numberline {4}{\ignorespaces 4KB Image by Proposed Method}}{3}} | |||

| \newlabel{fig:4KMy}{{4}{3}} | |||

| \@writefile{lof}{\defcounter {refsection}{0}\relax }\@writefile{lof}{\contentsline {figure}{\numberline {5}{\ignorespaces 4KB Image by JPEG}}{3}} | |||

| \newlabel{fig:4KJpeg}{{5}{3}} | |||

| \@writefile{loa}{\defcounter {refsection}{0}\relax }\@writefile{loa}{\contentsline {algorithm}{\numberline {1}{\ignorespaces Segment Tree Preprocess}}{4}} | |||

| \newlabel{code:SegmentTreePreprocess}{{1}{4}} | |||

| \@writefile{loa}{\defcounter {refsection}{0}\relax }\@writefile{loa}{\contentsline {algorithm}{\numberline {2}{\ignorespaces Region Selection}}{4}} | |||

| \newlabel{code:RegionSelection}{{2}{4}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {subsection}{\numberline {\unhbox \voidb@x \hbox {II-B}}FLIR ONE PRO}{5}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {subsection}{\numberline {\unhbox \voidb@x \hbox {II-C}}Raspberry Pi 3}{5}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {subsection}{\numberline {\unhbox \voidb@x \hbox {II-D}}Simple Data Compressing}{5}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {subsubsection}{\numberline {\unhbox \voidb@x \hbox {II-D}1}Huffman Coding}{5}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {subsubsection}{\numberline {\unhbox \voidb@x \hbox {II-D}2}Z-score Threshold}{5}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {subsubsection}{\numberline {\unhbox \voidb@x \hbox {II-D}3}Gaussian Function Fitting}{6}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {section}{\numberline {III}Data Size Decision Framework}{6}} | |||

| \newlabel{sec:design}{{III}{6}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {subsection}{\numberline {\unhbox \voidb@x \hbox {III-A}}Region Represent Grammar}{6}} | |||

| \@writefile{lof}{\defcounter {refsection}{0}\relax }\@writefile{lof}{\contentsline {figure}{\numberline {3}{\ignorespaces PNG image, size = 46KB}}{7}} | |||

| \newlabel{fig:pngImage}{{3}{7}} | |||

| \@writefile{lof}{\defcounter {refsection}{0}\relax }\@writefile{lof}{\contentsline {figure}{\numberline {4}{\ignorespaces Region separate by CFG}}{7}} | |||

| \newlabel{fig:SeparateImage}{{4}{7}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {subsection}{\numberline {\unhbox \voidb@x \hbox {III-B}}Data Structure and Region Selection Algorithm}{7}} | |||

| \@writefile{loa}{\defcounter {refsection}{0}\relax }\@writefile{loa}{\contentsline {algorithm}{\numberline {1}{\ignorespaces Segment Tree Preprocess}}{8}} | |||

| \newlabel{code:SegmentTreePreprocess}{{1}{8}} | |||

| \@writefile{loa}{\defcounter {refsection}{0}\relax }\@writefile{loa}{\contentsline {algorithm}{\numberline {2}{\ignorespaces Region Selection}}{8}} | |||

| \newlabel{code:RegionSelection}{{2}{8}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {section}{\numberline {IV}Performance Evaluation}{9}} | |||

| \newlabel{sec:eval}{{IV}{9}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {subsubsection}{\numberline {\unhbox \voidb@x \hbox {IV-}1}Date Structure Initialize}{9}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {subsubsection}{\numberline {\unhbox \voidb@x \hbox {IV-}2}Image Loading}{9}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {subsubsection}{\numberline {\unhbox \voidb@x \hbox {IV-}3}Region Separation}{9}} | |||

| \newlabel{sec:conclusion}{{V}{9}} | |||

| \@writefile{toc}{\defcounter {refsection}{0}\relax }\@writefile{toc}{\contentsline {section}{\numberline {V}Conclusion}{9}} | |||

| \@writefile{lof}{\defcounter {refsection}{0}\relax }\@writefile{lof}{\contentsline {figure}{\numberline {5}{\ignorespaces 4KB Image by Proposed Method}}{10}} | |||

| \newlabel{fig:4KMy}{{5}{10}} | |||

| \@writefile{lof}{\defcounter {refsection}{0}\relax }\@writefile{lof}{\contentsline {figure}{\numberline {6}{\ignorespaces 4KB Image by JPEG}}{11}} | |||

| \newlabel{fig:4KJpeg}{{6}{11}} | |||

| \@writefile{lof}{\defcounter {refsection}{0}\relax }\@writefile{lof}{\contentsline {figure}{\numberline {7}{\ignorespaces Proposed method and JPEG comparing}}{12}} | |||

| \newlabel{fig:compareToJpeg}{{7}{12}} | |||